Wow. What a day! And what a story!

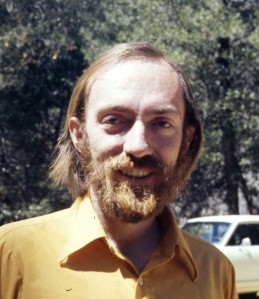

Kip Thorne in 1972, around the time MTW was completed.

It is hard for me to believe, but I have been on the Caltech faculty for nearly a third of a century. And when I arrived in 1983, interferometric detection of gravitational waves was already a hot topic of discussion here. At Kip Thorne’s urging, Ron Drever had been recruited to Caltech and was building the 40-meter prototype interferometer (which is still operating as a testbed for future detection technologies). Kip and his colleagues, spurred by Vladimir Braginsky’s insights, had for several years been actively studying the fundamental limits of quantum measurement precision, and how these might impact the search for gravitational waves.

I decided to bone up a bit on the subject, so naturally I pulled down from my shelf the “telephone book” (Misner, Thorne, and Wheeler’s mammoth Gravitation) and browsed Chapter 37 (Detection of Gravitational Waves), for which Kip had been the lead author. The chapter brimmed over with enthusiasm for the subject, but to my surprise interferometers were hardly mentioned. Instead the emphasis was on mechanical bar detectors. These had been pioneered by Joseph Weber, whose efforts in the 1960s had first aroused Kip’s interest in detecting gravitational waves, and by Braginsky.

I sought Kip out for an explanation, and with characteristic clarity and patience he told how his views had evolved. He had realized in the 1970s that a strain sensitivity of order $10^{-21}$ would be needed for a good chance at detection, and after many discussions with colleagues like Drever, Braginsky, and Rai Weiss, he had decided that kind of sensitivity would not be achievable with foreseeable technology using bars.

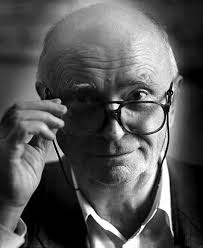

Ron Drever, who built Caltech’s 40-meter prototype interferometer in the 1980s.

We talked about what would be needed: a kilometer-scale detector capable of sensing displacements of $10^{-18}$ meters. I laughed. As he had many times by then, Kip told why this goal was not completely crazy, if there is enough light in an interferometer, which bounces back and forth many times as a waveform passes. Immediately after the discussion ended I went to my desk and did some crude calculations. The numbers kind of worked, but I shook my head, unconvinced. This was going to be a huge undertaking. Success seemed unlikely. Poor Kip!

I’ve never been involved in LIGO, but Kip and I remained friends, and every now and then he would give me the inside scoop on the latest developments (most memorably while walking the streets of London for hours on a beautiful spring evening in 1991). From afar I followed the forced partnership between Caltech and MIT that was forged in the 1980s, and the painful transition from a small project under the leadership of Drever-Thorne-Weiss (great scientists but lacking much-needed management expertise) to a large collaboration under a succession of strong leaders, all based at Caltech.

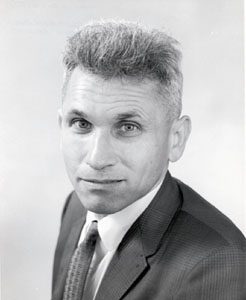

Vladimir Braginsky, who realized that quantum effects limit the sensitivity of gravitational wave detectors.

During 1994-95, I co-chaired a committee formulating a long-range plan for Caltech physics, and we spent more time talking about LIGO than any other issue. Part of our concern was whether a small institution like Caltech could absorb such a large project, which was growing explosively and straining Institute resources. And we also worried about whether LIGO would ultimately succeed. But our biggest worry of all was different — could Caltech remain at the forefront of gravitational wave research so that if and when LIGO hit paydirt we would reap the scientific benefits?

A lot has changed since then. After searching for years, we made two crucial new faculty appointments: theorist Yanbei Chen (2007), who provided seminal ideas for improving sensitivity, and experimentalist Rana Adhikari (2006), a magician at the black art of making an interferometer really work. Alan Weinstein transitioned from high energy physics to become a leader of LIGO data analysis. We established a world-class numerical relativity group, now led by Mark Scheel. Staff scientists like Stan Whitcomb also had an essential role, as did longtime Project Manager Gary Sanders. LIGO Directors Robbie Vogt, Barry Barish, Jay Marx, and now Dave Reitze have provided effective and much-needed leadership.

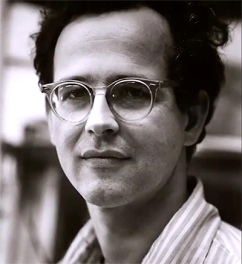

Rai Weiss, around the time he conceived LIGO in an amazing 1972 paper.

My closest connection to LIGO arose during the 1998-99 academic year, when Kip asked me to participate in a “QND reading group” he organized. (QND stands for Quantum Non-Demolition, Braginsky’s term for measurements that surpass the naïve quantum limits on measurement precision.) At that time we envisioned that Advanced LIGO would turn on in 2008, yet there were still many questions about how it would achieve the sensitivity required to ensure detection. I took part enthusiastically, and learned a lot, but never contributed any ideas of enduring value. The discussions that year did have positive outcomes, however, leading for example to a seminal paper by Kimble, Levin, Matsko, Thorne, and Vyatchanin on improving precision through squeezing of light. By the end of the year I had gained a much better appreciation of the strength of the LIGO team, and had accepted that Advanced LIGO might actually work!

I once asked Vladimir Braginsky why he spent years working on bar detectors for gravitational waves, while at the same time realizing that fundamental limits on quantum measurement would make successful detection very unlikely. Why wasn’t he trying to build an interferometer already in the 1970s? Braginsky loved to be asked questions like this, and his answer was a long story, told with many dramatic flourishes. The short answer is that he viewed interferometric detection of gravitational waves as too ambitious. A bar detector was something he could build in his lab, while an interferometer of the appropriate scale would be a long-term project involving a much larger, technically diverse team.

Joe Weber, whose audacious belief that gravitational waves are detectable on earth inspired Kip Thorne and many others.

Kip’s chapter in MTW ends with section 37.10 (“Looking toward the future”) which concludes with this juicy quote (written almost 45 years ago):

“The technical difficulties to be surmounted in constructing such detectors are enormous. But physicists are ingenious; and with the impetus provided by Joseph Weber’s pioneering work, and with the support of a broad lay public sincerely interested in pioneering in science, all obstacles will surely be overcome.”

That’s what we call vision, folks. You might also call it cockeyed optimism, but without optimism great things would never happen.

Optimism alone is not enough. For something like the detection of gravitational waves, we needed technical ingenuity, wise leadership, lots and lots of persistence, the will to overcome adversity, and ultimately the efforts of hundreds of hard-working, talented scientists and engineers. Not to mention the courage displayed by the National Science Foundation in supporting such a risky project for decades.

I have never been prouder than I am today to be part of the Caltech family.
