One day, early this spring, I found myself in a hotel elevator with three other people. The cohort consisted of two theoretical physicists, one computer scientist, and what appeared to be a normal person. I pressed the elevator’s 4 button, as my husband (the computer scientist) and I were staying on the hotel’s fourth floor. The button refused to light up.

“That happened last time,” the normal person remarked. He was staying on the fourth floor, too.

The other theoretical physicist pressed the 3 button.

“Should we press the 5 button,” the normal person continued, “and let gravity do its work?”

It took me a moment to realize that he was suggesting we ascend to the fifth floor and then induce the elevator to fall under gravity’s influence to the fourth. We were reaching floor three, so I exchanged a “have a good evening” with the other physicist, who left. The door shut, and we began to ascend.

“As it happens,” I remarked, “he’s an expert on gravity.” The other physicist was Herman Verlinde, a professor at Princeton.

Such is a side effect of visiting the Simons Center for Geometry and Physics. The Simons Center graces the Stony Brook University campus, which was awash in daffodils and magnolia blossoms last month. The center derives its name from hedge-fund manager Jim Simons (who passed away during the writing of this article). He produced landmark research in physics and mathematics before earning his fortune on Wall Street as a quant. Simons supported his early loves by funding the Simons Center and other scientific initiatives. The center reminded me of the Perimeter Institute for Theoretical Physics, down to the café’s linen napkins, so I felt at home.

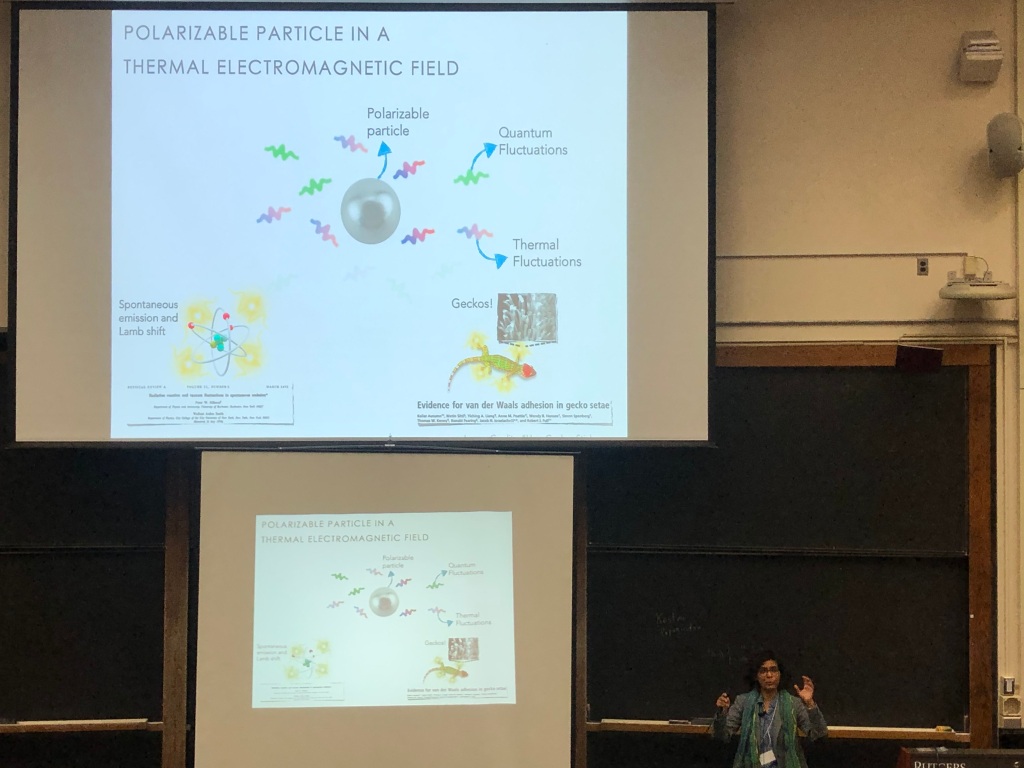

I was participating in the Simons Center workshop “Entanglement, thermalization, and holography.” It united researchers from quantum information and computation, black-hole physics and string theory, quantum thermodynamics and many-body physics, and nuclear physics. We were to share our fields’ approaches to problems centered on thermalization, entanglement, quantum simulation, and the like. I spoke about the eigenstate thermalization hypothesis, which elucidates how many-particle quantum systems thermalize. The hypothesis fails, I argued, if a system’s dynamics conserve quantities (analogous to energy and particle number) that can’t be measured simultaneously. Herman Verlinde discussed the ER=EPR conjecture.

My PhD advisor, John Preskill, blogged about ER=EPR almost exactly eleven years ago. Read his blog post for a detailed introduction. Briefly, ER=EPR posits an equivalence between wormholes and entanglement.

The ER stands for Einstein–Rosen, as in Einstein–Rosen bridge. Sean Carroll provided the punchiest explanation I’ve heard of Einstein–Rosen bridges. He served as the scientific advisor for the 2011 film Thor. Sean suggested that the film feature a wormhole, a connection between two black holes. The filmmakers replied that wormholes were passé. So Sean suggested that the film feature an Einstein–Rosen bridge. “What’s an Einstein–Rosen bridge?” the filmmakers asked. “A wormhole.” So Thor features an Einstein–Rosen bridge.

EPR stands for Einstein–Podolsky–Rosen. The three authors published a quantum paradox in 1935. Their EPR paper galvanized the community’s understanding of entanglement.

ER=EPR is a conjecture that entanglement is closely related to wormholes. As Herman said during his talk, “You probably need entanglement to realize a wormhole.” Put another way, the conjecture suggests that any two maximally entangled particles are connected by a wormhole. The idea crystallized in a paper by Juan Maldacena and Lenny Susskind. They drew on work by Mark Van Raamsdonk (who masterminded the workshop behind this Quantum Frontiers post) and Brian Swingle (who’s appeared in other posts).

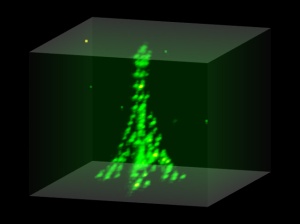

Herman presented four pieces of evidence for the conjecture, as you can hear in the video of his talk. One piece emerges from the AdS/CFT duality, a parallel between certain space-times (called anti–de Sitter, or AdS, spaces) and quantum theories that have a certain symmetry (called conformal field theories, or CFTs). A CFT, being quantum, can contain entanglement. One entangled state is called the thermofield double. Suppose that a quantum system is in a thermofield double and you discard half the system. The remaining half looks thermal—we can attribute a temperature to it. Evidence indicates that, if a CFT has a temperature, then it parallels an AdS space that contains a black hole. So entanglement appears connected to black holes via thermality and temperature.
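For readers who like to check such claims numerically, here is a minimal sketch of the "discard half, see a temperature" step. It is a toy, not a CFT: I assume a three-level system with made-up energies, an inverse temperature `beta` in units where Boltzmann's constant is 1, and real amplitudes (so the complex-conjugation convention in the thermofield double doesn't matter). The function names are my own, invented for illustration.

```python
import numpy as np

def thermofield_double(energies, beta):
    """Amplitudes psi[i, j] of a toy thermofield double on two copies
    (left, right) of a system with the given energy levels.
    The state is sum_n exp(-beta * E_n / 2) |n>_left |n>_right, normalized."""
    energies = np.asarray(energies, dtype=float)
    weights = np.exp(-beta * energies / 2.0)
    weights /= np.linalg.norm(weights)  # same as dividing by sqrt(Z)
    return np.diag(weights)             # diagonal in the energy basis

def discard_left(psi):
    """Partial trace over the left copy:
    rho_right[j, k] = sum_i psi[i, j] * conj(psi[i, k])."""
    return psi.T @ psi.conj()

def gibbs_state(energies, beta):
    """Thermal (Gibbs) density matrix exp(-beta * H) / Z in the energy basis."""
    energies = np.asarray(energies, dtype=float)
    populations = np.exp(-beta * energies)
    populations /= populations.sum()
    return np.diag(populations)

# Hypothetical toy parameters, chosen only for illustration.
energies = [0.0, 1.0, 2.0]
beta = 1.0

psi = thermofield_double(energies, beta)
rho_right = discard_left(psi)

# The remaining half should match the thermal state at inverse temperature beta.
print(np.allclose(rho_right, gibbs_state(energies, beta)))
```

The whole two-copy state is pure, yet the half you keep is mixed, with populations set by the Boltzmann weights. That is the sense in which the remaining half "looks thermal."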

Despite the evidence—and despite the eleven years since John’s publication of his blog post—ER=EPR remains a conjecture. Herman remarked, “It’s more like a slogan than anything else.” His talk’s abstract contains more hedging than a suburban yard. I appreciated the conscientiousness, a college acquaintance having once observed that I spoke carefully even over sandwiches with a friend.

A “source of uneasiness” about ER=EPR, to Herman, is measurability. We can’t check whether a quantum state is entangled via any single measurement. We have to prepare many identical copies of the state, measure the copies, and process the outcome statistics. In contrast, we seem able to conclude that a space-time is connected without measuring multiple copies of the space-time. We can check that a hotel’s first floor is connected to its fourth, for instance, by riding in an elevator once.

Or by riding an elevator to the fifth floor and descending by one story. My husband, the normal person, and I took the stairs instead of falling. The hotel fixed the elevator within a day or two, but who knows when we’ll fix on the truth value of ER=EPR?

With thanks to the conference organizers for their invitation, to the Simons Center for its hospitality, to Jim Simons for his generosity, and to the normal person for inspiration.