Another conference about entropy. Another graveyard.

Last year, I blogged about the University of Cambridge cemetery visited by participants in the conference “Eddington and Wheeler: Information and Interaction.” We’d lectured each other about entropy–a quantification of decay, of the march of time. Then we marched to an overgrown graveyard, where scientists who’d lectured about entropy decades earlier were decaying.

This July, I attended the conference “Beyond i.i.d. in information theory.” The acronym “i.i.d.” stands for “independent and identically distributed,” which requires its own explanation. The conference took place at BIRS, the Banff International Research Station, in Canada. Locals pronounce “BIRS” as “burrs,” the spiky plant bits that stick to your socks when you hike. (I had thought that one pronounces “BIRS” as “beers,” over which participants in quantum conferences debate about the Measurement Problem.) Conversations at “Beyond i.i.d.” dinner tables ranged from mathematical identities to the hiking for which most tourists visit Banff to the bears we’d been advised to avoid while hiking. So let me explain the meaning of “i.i.d.” in terms of bear attacks.

The BIRS conference center. Beyond here, there be bears.

Suppose that, every day, exactly one bear attacks you as you hike in Banff. Every day, you have a probability $p_1$ of facing down a black bear, a probability $p_2$ of facing down a grizzly, and so on. These probabilities form a distribution $\{p_i\}$ over the set of possible events (of possible attacks). We call the type of attack that occurs on a given day a random variable. The distribution associated with each day equals the distribution associated with each other day. Hence the variables are identically distributed. Monday's attack doesn't affect Tuesday's, and so on, so the variables are independent.
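For readers who like to poke at definitions, here is a minimal sketch of an i.i.d. sequence of attacks. The probabilities and the names `BEAR_PROBS` and `iid_attacks` are hypothetical, invented for illustration. Every day draws from the same weights (identically distributed), and no draw looks at earlier days (independent).

```python
import random
from collections import Counter

# Hypothetical distribution {p_i}; these numbers are invented for illustration.
BEAR_PROBS = {"black": 0.5, "grizzly": 0.25, "other": 0.25}

def iid_attacks(n_days, probs, seed=None):
    """Return one attack type per day, each drawn from the same distribution."""
    rng = random.Random(seed)
    kinds = list(probs)
    weights = [probs[k] for k in kinds]
    attacks = []
    for _ in range(n_days):
        # Same weights every day: identically distributed.
        # Each draw ignores earlier days: independent.
        attacks.append(rng.choices(kinds, weights=weights)[0])
    return attacks

attacks = iid_attacks(10_000, BEAR_PROBS, seed=1)
counts = Counter(attacks)
freqs = {k: counts[k] / len(attacks) for k in BEAR_PROBS}
print(freqs)  # each empirical frequency should sit close to its p_i
```

A non-i.i.d. variant would let each day's weights depend on the previous day's attack, as in the grizzly-avoidance scenario below.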

Information theorists quantify efficiencies with which i.i.d. tasks can be performed. Suppose that your mother expresses concern about your hiking. She asks you to report which bear harassed you on which day. You compress your report into the fewest possible bits, or units of information. Consider the limit as the number of days approaches infinity, called the asymptotic limit. The number of bits required per day approaches a function, called the Shannon entropy $H_{\rm S}$, of the distribution:

$$\text{Number of bits required per day} \to H_{\rm S}(\{p_i\}).$$
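To make the formula concrete: $H_{\rm S}(\{p_i\}) = -\sum_i p_i \log_2 p_i$. The sketch below computes it for a hypothetical three-outcome distribution and compares it with the average codeword length of a Huffman code, one standard compression scheme (the post doesn't specify a scheme). Because these made-up probabilities are powers of 1/2, a day-by-day Huffman code already meets the entropy exactly. For general distributions, a day-by-day code can overshoot by up to one bit per day, and the per-day cost approaches $H_{\rm S}$ only when many days are encoded together, as in the asymptotic limit.

```python
import heapq
import math

# Hypothetical attack probabilities, invented for illustration.
BEAR_PROBS = {"black": 0.5, "grizzly": 0.25, "other": 0.25}

def shannon_entropy(probs):
    """H_S({p_i}) = -sum_i p_i log2 p_i, in bits; outcomes with p_i = 0 contribute 0."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)

def huffman_lengths(probs):
    """Codeword length (in bits) assigned to each outcome by a Huffman code."""
    # Heap entries: (subtree probability, unique tiebreak counter, outcomes in subtree)
    heap = [(p, i, [s]) for i, (s, p) in enumerate(probs.items())]
    heapq.heapify(heap)
    lengths = {s: 0 for s in probs}
    counter = len(heap)
    while len(heap) > 1:
        p1, _, syms1 = heapq.heappop(heap)
        p2, _, syms2 = heapq.heappop(heap)
        # Merging the two least likely subtrees adds one bit to every codeword in them.
        for s in syms1 + syms2:
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, counter, syms1 + syms2))
        counter += 1
    return lengths

lengths = huffman_lengths(BEAR_PROBS)
avg_bits = sum(BEAR_PROBS[s] * lengths[s] for s in BEAR_PROBS)
print(shannon_entropy(BEAR_PROBS), avg_bits)
```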

The Shannon entropy describes many asymptotic properties of i.i.d. variables. Similarly, the von Neumann entropy $H_{\rm vN}$ describes many asymptotic properties of i.i.d. quantum states.
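The von Neumann entropy of a density matrix $\rho$ is $H_{\rm vN}(\rho) = -{\rm Tr}(\rho \log_2 \rho)$, which equals the Shannon entropy of $\rho$'s eigenvalues. A minimal sketch, restricted (as an assumption, to stay dependency-free) to a single qubit, whose 2×2 density matrix has closed-form eigenvalues. A general version would use a numerical eigensolver such as `numpy.linalg.eigvalsh`. The function names are hypothetical.

```python
import math

def qubit_eigenvalues(rho):
    """Eigenvalues of a 2x2 Hermitian matrix [[a, b], [conj(b), d]]."""
    a, b, d = rho[0][0].real, rho[0][1], rho[1][1].real
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    return mean + radius, mean - radius

def von_neumann_entropy_qubit(rho):
    """H_vN(rho) = -Tr(rho log2 rho): the Shannon entropy of rho's eigenvalues."""
    # Eigenvalues near zero contribute nothing (the limit x log x -> 0).
    return -sum(lam * math.log2(lam) for lam in qubit_eigenvalues(rho) if lam > 1e-12)

maximally_mixed = [[0.5, 0.0], [0.0, 0.5]]  # one full bit of ignorance
plus_state = [[0.5, 0.5], [0.5, 0.5]]       # pure state |+><+|: no ignorance
print(von_neumann_entropy_qubit(maximally_mixed), von_neumann_entropy_qubit(plus_state))
```

A diagonal density matrix reduces to a classical distribution, so its von Neumann entropy matches the Shannon entropy of its diagonal.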

But you don’t hike for infinitely many days. The rate of black-bear attacks ebbs and flows. If you stumbled into grizzly land on Friday, you’ll probably avoid it, and have a lower grizzly-attack probability, on Saturday. Into how few bits can you compress finitely many non-i.i.d. variables?

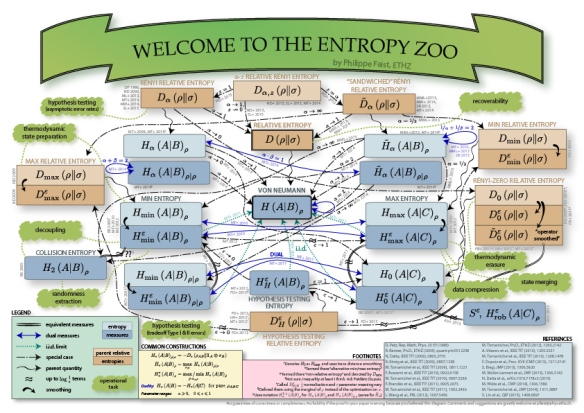

We answer such questions in terms of ɛ-smooth α-Rényi entropies, the sandwiched Rényi relative entropy, the hypothesis-testing entropy, and related beasts. These beasts form a zoo diagrammed by conference participant Philippe Faist. I wish I had his diagram on a placemat.
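For a taste of the zoo, the α-Rényi entropy of a distribution is $H_\alpha(\{p_i\}) = \frac{1}{1-\alpha}\log_2 \sum_i p_i^\alpha$ for $\alpha \ge 0$, $\alpha \ne 1$. As $\alpha \to 1$, it reduces to the Shannon entropy; as $\alpha \to \infty$, it becomes the min-entropy $-\log_2 \max_i p_i$. The sketch below covers only these unsmoothed classical quantities. The ɛ-smooth versions additionally optimize over distributions within ɛ of the original, a step not shown here. The function name and probabilities are hypothetical.

```python
import math

def renyi_entropy(probs, alpha):
    """Renyi entropy H_alpha in bits, for alpha >= 0 (alpha = 1 and inf taken as limits)."""
    ps = [p for p in probs if p > 0]
    if alpha == 1:
        # alpha -> 1 limit: the Shannon entropy
        return -sum(p * math.log2(p) for p in ps)
    if math.isinf(alpha):
        # alpha -> infinity limit: the min-entropy, -log2 max_i p_i
        return -math.log2(max(ps))
    return math.log2(sum(p ** alpha for p in ps)) / (1 - alpha)

probs = [0.5, 0.25, 0.25]  # hypothetical distribution
for alpha in (0, 0.5, 1, 2, math.inf):
    print(alpha, renyi_entropy(probs, alpha))
```

The printed values never increase as α grows. That ordering is one reason the different entropies bound different tasks' efficiencies from different sides.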

“Beyond i.i.d.” participants define these entropies, generalize the entropies, probe the entropies’ properties, and apply the entropies to physics. Want to quantify the efficiency with which you can perform an information-processing task or a thermodynamic task? An entropy might hold the key.

Many highlights distinguished the conference; I’ll mention a handful. If the jargon upsets your stomach, skip three paragraphs to Thermodynamic Thursday.

Aram Harrow introduced a resource theory that resembles entanglement theory but whose agents pay to communicate classically. Why, I interrupted him, define such a theory? The backstory involves a wager against quantum-information pioneer Charlie Bennett (more precisely, against an opinion of Bennett’s). For details, and for a quantum version of The Princess and the Pea, watch Aram’s talk.

Graeme Smith and colleagues “remove[d] the . . . creativity” from proofs that certain entropic quantities satisfy subadditivity. Subadditivity is a property that facilitates proofs and that offers physical insights into applications. Graeme & co. designed an algorithm for checking whether entropic quantity Q satisfies subadditivity. Just add water; no innovation required. How appropriate, conference co-organizer Mark Wilde observed. BIRS has the slogan “Inspiring creativity.”
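For the Shannon entropy, subadditivity says that the entropy of a joint distribution over two variables never exceeds the sum of the marginals' entropies: $H_{\rm S}(AB) \le H_{\rm S}(A) + H_{\rm S}(B)$. The sketch below does not reproduce the algorithm by Graeme & co. It merely spot-checks the inequality on a hypothetical joint distribution of Friday's and Saturday's attacks, in which a Friday grizzly attack drives you out of grizzly land on Saturday. The gap $H_{\rm S}(A) + H_{\rm S}(B) - H_{\rm S}(AB)$ is the mutual information, which vanishes exactly when the two days are independent.

```python
import math
from collections import defaultdict

def entropy(probs):
    """Shannon entropy, in bits, of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def marginals(joint):
    """Marginal distributions of A and B from a dict {(a, b): p}."""
    pa, pb = defaultdict(float), defaultdict(float)
    for (a, b), p in joint.items():
        pa[a] += p
        pb[b] += p
    return pa, pb

def subadditivity_gap(joint):
    """H(A) + H(B) - H(AB); nonnegative for every joint distribution."""
    pa, pb = marginals(joint)
    return entropy(pa.values()) + entropy(pb.values()) - entropy(joint.values())

# Hypothetical (Friday, Saturday) attacks: a Friday grizzly means a Saturday black bear.
friday_saturday = {("black", "black"): 0.25, ("black", "grizzly"): 0.25,
                   ("grizzly", "black"): 0.5}
# Hypothetical independent days, for contrast.
independent = {(a, b): 0.25 for a in ("black", "grizzly") for b in ("black", "grizzly")}

gap_correlated = subadditivity_gap(friday_saturday)
gap_independent = subadditivity_gap(independent)
print(gap_correlated, gap_independent)
```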

Patrick Hayden applied one-shot entropies to AdS/CFT and emergent spacetime, enthused about elsewhere on this blog. Debbie Leung discussed approximations to Haar-random unitaries. Gilad Gour compared resource theories.

Conference participants graciously tolerated my talk about thermodynamic resource theories. I closed my eyes to symbolize the ignorance quantified by entropy. Not really; the photo didn’t turn out as well as hoped, despite the photographer’s goodwill. But I could have closed my eyes to symbolize entropic ignorance.

Thermodynamics and resource theories dominated Thursday. Thermodynamics is the physics of heat, work, entropy, and stasis. Resource theories are simple models for transformations, such as the transformation from a charged battery and a Tesla at the bottom of a hill to an empty battery and a Tesla atop the hill.

My advisor’s Tesla. No wonder I study thermodynamic resource theories.

Philippe Faist, diagrammer of the Entropy Zoo, compared two models for thermodynamic operations. I introduced a generalization of resource theories for thermodynamics. Last year, Joe Renes of ETH and I broadened thermo resource theories to model exchanges of not only heat, but also particles, angular momentum, and other quantities. We calculated work in terms of the hypothesis-testing entropy. Though our generalization won’t surprise Quantum Frontiers diehards, the magic tricks in my presentation might.
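The post doesn't define the hypothesis-testing entropy. One common classical form, the hypothesis-testing relative entropy, asks how small the probability $\sum_i t_i q_i$ of accepting a distribution $q$ can be made by a (possibly randomized) test $t$ that accepts a distribution $p$ with probability at least $1-\varepsilon$:
$D_H^\varepsilon(p\|q) = -\log_2 \min\{\sum_i t_i q_i : 0 \le t_i \le 1,\ \sum_i t_i p_i \ge 1-\varepsilon\}$.
Conventions differ across papers (some write $\varepsilon$ where this uses $1-\varepsilon$, or add normalizations), and this sketch is not the work expression from the paper with Joe Renes. The minimization is a linear program. For classical distributions, a Neyman-Pearson-style greedy solves it: accept outcomes in increasing order of $q_i/p_i$, accepting the last one only partially. The function name and example distributions are hypothetical.

```python
import math

def hypothesis_testing_divergence(p, q, eps):
    """Classical D_H^eps(p||q) in bits, under the (1 - eps) convention stated above."""
    target = 1 - eps  # minimum probability with which the test must accept p
    # Neyman-Pearson ordering: cheapest outcomes (lowest q_i per unit of p_i) first.
    # Outcomes with p_i = 0 never help meet the target, so they are never accepted.
    items = sorted((qi / pi, pi, qi) for pi, qi in zip(p, q) if pi > 0)
    accepted_p, cost = 0.0, 0.0
    for _, pi, qi in items:
        if accepted_p >= target:
            break
        t = min(1.0, (target - accepted_p) / pi)  # fraction of this outcome to accept
        accepted_p += t * pi
        cost += t * qi
    # A test that accepts p but never accepts q makes the divergence infinite.
    return math.inf if cost <= 0 else -math.log2(cost)

p = [0.5, 0.25, 0.25]   # hypothetical
q = [1/3, 1/3, 1/3]     # hypothetical
print(hypothesis_testing_divergence(p, q, 0.5))
```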

At twilight on Thermodynamic Thursday, I meandered down the mountain from the conference center. Entropies hummed in my mind like the mosquitoes I slapped from my calves. Rising from scratching a bite, I confronted the Banff Cemetery. Half-wild greenery framed the headstones that bordered the gravel path I was following. Thermodynamicists have associated entropy with the passage of time, with deterioration, with a fate we can’t escape. I seem unable to escape from brushing past cemeteries at entropy conferences.

Not that I mind, I thought while scratching the bite in Pasadena. At least I escaped attacks by Banff’s bears.

With thanks to the conference organizers and to BIRS for the opportunity to participate in “Beyond i.i.d. 2015.”
