In the previous posts in this series, I described how lessons I learned from Richard Feynman and John Preskill helped me become a more popular TA.

What I learned from a few others*

If by some miracle I ever get to be a professor, there are a few others I will look to for teaching wisdom, and I’ve already put some of their lessons to use.

When it comes to writing problem sets, I would look to the two physicists who write the best problem sets I’ve ever seen: Kip Thorne and Andreas Ludwig. In my opinion, a problem set should not just make you apply what you learned in class to examples that are essentially the same as ones you’ve seen before. The best problems make you work through an exciting new topic that the professor doesn’t have time to cover in lecture. During the summer after my sophomore year at Caltech, I was working at the LIGO Hanford Observatory, and I remember sitting in my office, looking out at one of its 4 km long arms, while working through some of Kip’s homework problems, because they were some of the best resources I could find for learning how that amazing contraption really works.1 And Ludwig probably has the best problems of all. The first problem set from one of his classes on many-body field theory, a pretty typical one, consists of two problems written over five pages, with six pages of appendices attached. Those problem sets looked daunting, but they really weren’t. Once you read through everything and thought carefully about it, the problems weren’t so bad, and you ended up deriving some really cool results!2

Finally, I’ll describe some things I learned from Ed McCaffery, who brilliantly handled the challenging job of teaching humanities at Caltech by accomplishing two goals. First, he “tricked” us into finding a “boring” subject interesting. Second, he had a great sense of who his audience was and, once he had our attention, taught us in a way we would actually be receptive to. He accomplished the first goal by making his lectures extremely humorous and ridiculous, but in such a way that they were actually filled with content. He accomplished the second by boiling the subject down to a few key principles and essentially deriving the rest of the ideas from them while ignoring all of the technical details (it was almost as if we were in a physics or math class). The main reason I kept going to his classes was to be entertained—which is especially impressive, seeing as I would normally consider law a terribly boring subject—but I accidentally ended up learning a lot. Of all the hums I took at Caltech, his two classes are the ones I remember most to this day, and I know I’m not alone in this regard.

If I’m ever put in the challenging position of, for example, teaching introductory physics to students who have no desire to learn it and are only taking it to satisfy some requirement (premeds, say, for illustrative purposes), I would try to accomplish the same two goals that McCaffery did in what is essentially the same scenario. It would be hard to be as funny as McCaffery, but maybe I could find some other way of being sufficiently ridiculous to “trick” the students into caring about the class. As for the second goal, with a little experimentation and a willingness to ignore what I was told to do, I bet it wouldn’t be too hard to teach some physics in a way these students could relate to and might actually remember after the class was over. In a bigger school like UCSB—where not everyone is going to be a scientist, a mathematician, or an engineer, and there are several segregated levels of introductory physics courses—I’ve often asked, “Why are we teaching premeds how to calculate the trajectory of a cannonball, the moment of inertia of a disk, or the efficiency of a heat engine in quantitative detail?” It’s pretty clear that most of them aren’t going to care about any of this, and they won’t need to know how to do any of it after they pass the class anyway. So is it really that shocking that they go through the class intending to do whatever it takes to get a good grade rather than to learn some science? I believe this is roughly equivalent to trying to teach Caltech undergrads the intricacies of the tax code the way you might teach USC law students, which I’m pretty sure wouldn’t be a huge success.

The first thing I would try would be to teach physics in a back-of-an-envelope kind of way, ignoring rigor and just trying to convey how powerful a tool physics, and really science in general, can be by applying it to problems I thought the students would find interesting or amusing. For example, I might explain how a simple scaling argument shows that the height most animals can jump is roughly the same regardless of their size (and, observationally, happens to be on the order of half a meter). Or maybe I’d explain how some basic knowledge of material properties lets you estimate how the maximum height of a mountain depends on the properties of a planet—and when you plug in the numbers for the earth, you basically get the height of Mt. Everest.3 You could probably even do this so that every example and every problem would actually be relevant to what premeds might use later in their careers. And maybe the most important part is that I know I would learn a lot from doing this (the course could even be completely different each time I taught it), so I would actually be excited about the material and motivated to explain it well.
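The two scaling arguments above fit in a few lines of Python. This is a minimal sketch under stated assumptions, not the treatment in the references of footnote 3: the muscle fraction, the work a kilogram of muscle delivers per contraction, and the energy per molecule needed to make the rock at a mountain’s base flow are all hypothetical round numbers, and the mountain estimate assumes one common form of the argument with SiO2-like molecules (about 60 proton masses each). What the code is meant to show is the scaling: the body size L cancels out of the jump height, and the maximum mountain height goes like 1/g.

```python
"""Back-of-an-envelope scaling sketches; every default parameter is an assumption."""

G_EARTH = 9.8            # surface gravity of the earth, m/s^2
PROTON_MASS = 1.67e-27   # kg
EV = 1.602e-19           # joules per electron volt


def jump_height(length_m, density=1000.0, muscle_fraction=0.1,
                work_per_kg_muscle=50.0, g=G_EARTH):
    """Rise of the center of mass for an animal of linear size length_m.

    Muscle work scales like muscle mass ~ L^3, and lifting the body by h
    costs m g h ~ L^3 h, so L cancels and h does not depend on size.
    muscle_fraction and work_per_kg_muscle are hypothetical round numbers.
    """
    body_mass = density * length_m ** 3                       # kg, ~ L^3
    work = muscle_fraction * body_mass * work_per_kg_muscle   # J, also ~ L^3
    return work / (body_mass * g)                             # m, independent of L


def max_mountain_height(g, energy_per_molecule_ev=0.1, molecule_mass_u=60):
    """Height at which the gravitational energy per molecule at the base,
    A m_p g h, reaches an assumed energy needed for the base rock to flow.
    """
    energy = energy_per_molecule_ev * EV
    return energy / (molecule_mass_u * PROTON_MASS * g)


if __name__ == "__main__":
    for size in (0.01, 0.1, 1.0):  # roughly mouse-sized to horse-sized, meters
        print(f"L = {size:5.2f} m -> jump height {jump_height(size):.2f} m")
    print(f"Earth: {max_mountain_height(G_EARTH) / 1e3:.0f} km")
    print(f"Mars:  {max_mountain_height(3.7) / 1e3:.0f} km")
```

The absolute numbers printed depend entirely on the assumed parameters; only the ratios come from the physics: the same jump height for every L, and mountains on a planet with Mars’s surface gravity (about 3.7 m/s^2) allowed to be roughly 9.8/3.7 ≈ 2.6 times taller.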

Figure 4.1: Maybe the most famous back-of-an-envelope calculation of all time. Oops, we added a scale bar and a time stamp. With a little dimensional analysis and some physical intuition, anyone can now estimate how powerful our nuclear bomb is.
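The caption alludes to estimating a blast’s energy from photographs. If the fireball’s growth depends only on the released energy E, the air density rho, and the time t, the only length you can build from them is (E t^2 / rho)^(1/5), so setting that equal to the radius R gives E ~ rho R^5 / t^2. Here is a minimal Python sketch; the dimensionless prefactor is assumed to be 1, and the radius and time in the usage line are hypothetical readings of the kind you would take off such a photo, not measurements from this figure.

```python
TNT_TON_J = 4.184e9  # joules per ton of TNT (conventional definition)


def blast_energy(radius_m, time_s, air_density=1.2):
    """Estimate blast energy in joules from the fireball radius at time t.

    Dimensional analysis: the only length built from E [kg m^2 s^-2],
    rho [kg m^-3] and t [s] is (E t^2 / rho)^(1/5). Setting R equal to it
    (dimensionless prefactor assumed to be 1) gives E = rho R^5 / t^2.
    """
    return air_density * radius_m ** 5 / time_s ** 2


if __name__ == "__main__":
    # Hypothetical readings: radius ~140 m about 25 ms after detonation.
    energy = blast_energy(140.0, 0.025)
    print(f"E ~ {energy:.1e} J ~ {energy / TNT_TON_J / 1e3:.0f} kilotons of TNT")
```

These inputs give about 1e14 J, a few tens of kilotons. Because of the fifth power, a 10% error in reading R shifts the estimate of E by about 60%, while errors in t matter much less.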

And if that didn’t work, I’d hope that in talking with the students and getting a sense of what was important to them, I would be able to come up with a different approach that would be successful. I simply don’t believe that it’s impossible to find a way to reach every kind of student, whether they be aspiring scientists, mathematicians, engineers, doctors, lawyers, historians, poets, artists, or politicians. Physics is just too exciting of a subject for that to be true, but you’ve got to know your audience. (Maybe McCaffery feels the same way about law and economics for all I know.)

Closing thoughts

There are two memories in particular of interactions with students that will always mean more to me than the teaching award itself, because they remind me of how I felt interacting with John. As we were leaving a particularly good lunch lecture,4 I remember Steve grinning from ear to ear and telling everyone within sight how awesome the lecture was. In a similar situation after one of my quantum field theory lectures,5 I was standing in the hallway answering a student’s question when, out of the corner of my eye, I saw a small group of students grinning just like Steve and overheard them saying that these were some of the best recitation sections they had ever been to. The other memory is of a student who came to my office hours and asked whether he could ask me about some random advanced physics topic he had been reading about, because he really wanted to understand it well and wanted to hear what I had to say about it. That student probably had no idea how good that made me feel, because that’s exactly how I felt anytime I asked John a question.

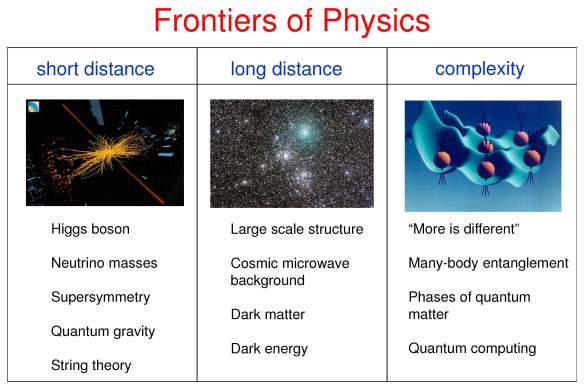

Looking back over my notes for the field theory course, I feel like I didn’t actually do that good a job overall, though I am happy that the students seemed to enjoy the course and learn a lot from it. There are some things I am very proud of. Probably the biggest is my last lecture on the Casimir effect, which included a digression on what it means for a theory to be “renormalizable” or “non-renormalizable” and why there is absolutely nothing wrong with the second kind in the context of effective field theories.6 After an introduction to the philosophy of effective field theories (see footnote 6 for an excellent reference), that discussion mostly consisted of the classic pictures, shown in Figure 4.2, of the standard model superseding Fermi theory, followed by string theory superseding the effective field theory of gravity,7 though I also very briefly mentioned that neutrino masses and proton decay could be understood by similar arguments.

Figure 4.2: The top three images are snapshots from my 5.5 page digression on renormalizability. The bottom image is an excerpt from notes that I frantically scribbled down after returning to my office at the IQI after John’s lecture on the same topic. The above-mentioned “regurgitation” should be clear.
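The renormalizability digression rests on power counting, which is easy to make concrete. In units with hbar = c = 1, a coupling c whose mass dimension d is negative enters amplitudes through the dimensionless combination c * E^(-d), which grows with energy and signals that the effective theory must be superseded somewhere around E ~ c^(1/d). The Python sketch below (the function name is hypothetical) applies this to the Fermi constant and to Newton’s constant written as 1/M_Planck^2. It is an order-of-magnitude argument only; in Fermi theory the W boson (about 80 GeV) actually shows up somewhat below the estimate.

```python
import math

G_FERMI_GEV2 = 1.1663787e-5               # Fermi constant, GeV^-2
M_PLANCK_GEV = 1.22e19                    # Planck mass, GeV
G_NEWTON_GEV2 = 1.0 / M_PLANCK_GEV ** 2   # Newton's constant, GeV^-2 (hbar = c = 1)


def breakdown_scale(coupling, mass_dimension):
    """Energy (same units as the coupling) at which c * E**(-d) reaches 1.

    Couplings with mass_dimension >= 0 (renormalizable by power counting)
    do not grow with energy this way, so math.inf is returned.
    """
    if mass_dimension >= 0:
        return math.inf
    return coupling ** (1.0 / mass_dimension)


if __name__ == "__main__":
    print(f"Fermi theory: ~{breakdown_scale(G_FERMI_GEV2, -2):.0f} GeV")
    print(f"Gravity:      ~{breakdown_scale(G_NEWTON_GEV2, -2):.2e} GeV")
    print(f"QED (alpha):  {breakdown_scale(1 / 137, 0)}")
```

The same function gives no breakdown scale for a dimensionless coupling like the fine-structure constant, which is the power-counting version of the statement that QED is renormalizable.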

But taken as a whole, the lectures were too technical for the intended audience, unlike my phase transition lecture. At the time I was giving them, I was working through Weinberg’s books on QFT (and GR) and was very excited about his non-standard approach, which seemed especially elegant to me.8 I think I let that excitement creep into some of my lectures without properly toning down the math,9 as is most clearly illustrated by my attempt to explain the spin-statistics theorem. That could have been explained much better to the intended audience with pictures along the lines of Feynman’s discussion in Elementary Particles and the Laws of Physics or John’s comments in his chapter on anyons.10 If I ever have the opportunity to teach again, I’ll try to do an even better John Preskill imitation, maybe perturbing it slightly with further wisdom gained from others over the years.
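Picture-based explanations of the spin-statistics connection, like the ones cited above, typically lean on one fact that is easy to check numerically: rotating a spin-j state by 2π multiplies it by (-1)^(2j), so half-integer spins pick up a minus sign. The spin-statistics connection then ties the sign acquired under exchange of two identical particles to this same factor. The Python sketch below only verifies the rotation phase, using the eigenvalues m = -j, ..., j of J_z; it does not prove the theorem, and the function name is hypothetical.

```python
import cmath
from fractions import Fraction


def rotation_2pi_phases(spin):
    """Eigenvalues of exp(-2*pi*i*J_z) on the spin-`spin` representation.

    J_z has eigenvalues m = -s, -s+1, ..., s, so a 2*pi rotation about z
    multiplies each basis state by exp(-2*pi*i*m).
    """
    s = Fraction(spin)
    if s < 0 or (2 * s).denominator != 1:
        raise ValueError("spin must be a non-negative multiple of 1/2")
    ms = [-s + k for k in range(int(2 * s) + 1)]
    return [cmath.exp(-2j * cmath.pi * float(m)) for m in ms]


if __name__ == "__main__":
    for spin in (Fraction(0), Fraction(1, 2), Fraction(1), Fraction(3, 2)):
        phases = rotation_2pi_phases(spin)
        expected = (-1) ** int(2 * spin)
        ok = all(abs(p - expected) < 1e-9 for p in phases)
        print(f"spin {spin}: every phase equals {expected:+d}? {ok}")
```

Every basis state of a given spin gets the same phase because 2m and 2j always have the same parity, which is why the 2π rotation acts as a single overall sign on the whole representation.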

*This section lies somewhat out of the blog’s main line of development, and may be omitted in a first reading.

- The problems are available here under the link to Gravitational Waves (Ph237, 2002). [Update: The link was broken for a while but has since been fixed.] It’s a really great resource, which also includes all of the video of Kip—another great lecturer with lots to learn from—teaching the course. If Kip hadn’t gone emeritus to start a career in Hollywood right after my freshman year, it’s possible that I would have been trying to imitate him instead of John. The very first thought I had when I opened my Caltech admissions letter was, “Yes! Now I’m going to get to learn GR from Kip Thorne!” It didn’t work out that way, but I still had a pretty awesome time there. ↩

- In classic Ludwig fashion, the few words telling you what you are actually expected to do for the assignment are written in bold. I actually already stole Ludwig’s style when I was given the opportunity to write an extra credit problem on magnetic monopoles for the electrodynamics class, a problem I wrote based on John’s excellent review article. (I know that at least one of my students actually read John’s paper after doing the problem because he asked me questions about it afterwards.) ↩

- I was first exposed to these things by Sterl Phinney in Caltech’s Physics 101 class. Here is a draft of a nice textbook on this material, here is another one in a similar vein, and here are some problems by Purcell. ↩

- It was originally a lecture on tachyons, which somehow ended with John explaining how the CMB spontaneously breaks Lorentz invariance. While the end of that lecture was especially awesome, the main part proved particularly valuable when I took string theory in grad school two years later, since it is still the best explanation I’ve ever seen or heard of what tachyons really are, and of why they’re not that scary or mysterious. The right two diagrams in Figure 2.1 of the second post (showing dispersion relations for ordinary and tachyonic particles and tachyon condensation in a Higgs-like potential) are actually the pictures John drew to describe this. While ordinary massive particles have a group velocity that is zero at zero momentum and approaches the speed of light from below as the momentum increases, tachyons have a group velocity that is formally infinite at the smallest allowed momentum and approaches the speed of light from above. But all this is saying is that you expanded about an unstable vacuum; there’s nothing scary like causality violation or a breakdown of special relativity. ↩

- I think it was the lecture where I explained how stubbornly insisting on promoting the global U(1) symmetry that the Dirac equation (the equation governing the dynamics of electrons and positrons) naturally possesses to a local U(1) symmetry forces you to add a photon and write down Maxwell’s equations. I had insomnia for a week when I learned that from Sergei Gukov as a junior at Caltech. Unfortunately, I don’t know of a good reference that explains it this way, so here are my notes for that class; the real fun starts on page 3. (Note that I was careful not to go through all the details of the math on the board and just highlighted the results enough to tell a good story.)

  However, I do have some excellent references for essentially the same result told from a slightly different point of view, one that includes gravitons (the spin-2 generalization of the spin-1 photons) as well as the spin-1 gauge bosons: two papers by Weinberg here and here (see also Chapters 2, 5, and 13 of The Quantum Theory of Fields), The Feynman Lectures on Gravitation, and a paper by Wald. For a good introduction and overview of all of this literature, along with lots more references and details, see comments by John and Kip here. For the Weinberg approach, see these awesome lectures by Freddy Cachazo and Nima Arkani-Hamed (all four of them, which also include a lot of extensions and many failed attempts to avoid what seems to be inevitable). (I have never seen the generalization to non-abelian gauge fields made explicit except by Cachazo or Arkani-Hamed. Also, if you’re having trouble getting through Chapter 2 of The Quantum Theory of Fields, a pretty necessary prerequisite for understanding a lot of Weinberg’s arguments, the first two lectures by Cachazo in that series make a huge dent, and the last two make a good dent in getting through Georgi.)

  The main point of a lot of these references is that if you include special relativity, quantum mechanics, and a massless spin-1 particle, you are essentially forced to write down Maxwell’s equations. If you do the same thing but for a massless spin-2 particle, you are essentially forced to write down Einstein’s equations. You have no choice; you have to do it! (Appropriate caveats about effective field theories apply.) You also find that you can’t do anything non-trivial (at least at low energies and under a few other technical assumptions) if you have a massless particle of spin greater than 2. See also here for another approach to a similar result. I had another week-long bout of insomnia when I discovered these references around the time I was actually teaching this course. ↩

- I felt like this was an especially important lesson for my students to learn, since they were using Bjorken and Drell as a textbook. It might be a classic, but it’s a dated classic, and it certainly doesn’t explain Ken Wilson’s incredible insights. See this paper for a very nice, and at one point humorous, discussion by Joe Polchinski that should be required reading for anyone before they take a QFT class. (Here is the more technical paper on the topic that Joe is really famous for.) ↩

- See here and here for complementary introductions to thinking about gravity as an effective field theory, and here for a more comprehensive review. See also this take by Weinberg, which also discusses the possibility that the effective field theory of gravity is asymptotically safe and that that’s all there is. Weinberg presents a similar argument in this lecture, where he says, “If I had to bet, I would bet that’s not the case….My bet would be something on string theory. I’m not against string theory. I just want to raise this as a possibility we shouldn’t forget.” ↩

- I think Weinberg’s books often get an unfair rap. The three volumes of The Quantum Theory of Fields speak for themselves. They’re certainly not for beginners, but once you’ve gotten through several other books first, they offer a really great way to look at things. (See the links to Freddy Cachazo in footnote 5 if you want some help getting through some early parts that seem to deter a lot of people from ever really trying to read Weinberg.)

  But his GR book is one of my all-time favorites (though the cosmology parts are a bit dated, so you should consult his newer book, or even his awesome popular science book, on that subject). It starts by taking the results discussed in footnote 5 seriously and viewing the (strong) equivalence principle as a theorem derived from quantum field theory, rather than a postulate, from which the rest of general relativity can be derived. (A mathematician may not call it a theorem, but I’m a physicist.) All of the same equations you would find in other GR books that use the more traditional geometric approach are there; it’s just that different words are used to describe them. The following quote by Weinberg nicely summarizes the approach taken in the book (and explains why I encounter so much resistance to it, seeing as I’m surrounded by “many general relativists”):

  …the geometric interpretation of the theory of gravitation has dwindled to a mere analogy, which lingers in our language in terms like “metric,” “affine connection,” and “curvature,” but is not otherwise very useful. The important thing is to be able to make predictions…and it simply doesn’t matter whether we ascribe these predictions to the physical effect of gravitational fields on the motion of planets and photons or to a curvature of space and time. (The reader should be warned that these views are heterodox and would be met with objections from many general relativists.)

  But I love this point of view because it lays the groundwork for putting gravity in its place as “just” another effective field theory. Despite what you may have been led to believe, gravity and quantum mechanics actually do play nicely together, at least for a while, until you try to push them too close and all of the disasters you’ve heard about kick in, signaling the need for new physics (see Figure 4.2 again). To quote Joe, “Nobody ever promised you a rose garden” (see footnote 6), but this seems like at least a nice field of grass to me. See footnote 7 for more on this view. ↩

- In my opinion, failing to “tone down the math” is one of the easiest mistakes to make, and one of the hardest to avoid, when teaching any audience, even your own peers. It can be very tempting to go through an argument in all the gory details, but that is when, at least for me when I’m watching a talk, you start to lose the audience. Besides, no one is really going to believe you unless they reproduce the results for themselves with their own pencil (or piece of chalk), so you might as well tell a good story and leave the dotting of the i’s and the crossing of the t’s “as an exercise for the listener.” Now whenever I prepare a talk, I try to take into account who the audience will be and think to myself, “Remember, try to say it like John would.” I’m still not that great at it, but I think I’m getting better. ↩

- Everyone should read John’s comments. I’m embarrassed to admit it, but I did get the “quite misleading” impression discussed on page 10. This even caused great confusion when I asserted some wrong things in a condensed matter student talk, but since none of my colleagues were able to isolate my mistake clearly, or at least communicate it to me, I think this misleading impression is quite widespread.

  I think the point is that the standard proofs of the spin-statistics theorem (at least the ones given in Weinberg, Srednicki, and Streater and Wightman) show that special relativity and quantum mechanics require you to add antiparticles and to quantize with bosonic statistics for integer spins and fermionic statistics for half-integer spins. This is completely correct—and really cool!—but as John points out, all that is necessary for the spin-statistics connection is the existence of antiparticles. In QFT, you see that you need antiparticles and then immediately need to quantize them “properly,” but it’s easy to conflate these two steps. It also didn’t help that I was explicitly told many times that you need relativistic quantum mechanics to understand the spin-statistics connection. Unfortunately, I repeated this partial lie to my own students. ↩
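Footnote 4’s two claims about tachyons can be checked in a few lines. With c = 1, the dispersion relation E^2 = p^2 + m^2 gives the group velocity v = dE/dp = p/E; for m^2 < 0, a real energy requires p^2 > |m^2|, and v diverges at that edge and falls toward 1 from above as p grows. Expanding a Higgs-like potential V(phi) = -mu^2 phi^2 / 2 + lam phi^4 / 4 (parameters hypothetical) shows where the negative m^2 comes from: the curvature V'' is -mu^2 at the unstable point phi = 0 and +2 mu^2 at the true minimum phi = mu / sqrt(lam). The Python sketch below uses illustrative function names and is not taken from John’s lecture.

```python
import math


def group_velocity(p, mass_squared):
    """v = dE/dp = p / E for E^2 = p^2 + m^2, in units with c = 1.

    mass_squared > 0 describes an ordinary particle; mass_squared < 0 a
    tachyon, which only has a real energy when p^2 > -mass_squared.
    """
    energy_squared = p * p + mass_squared
    if energy_squared <= 0:
        raise ValueError("no real energy at this momentum")
    return p / math.sqrt(energy_squared)


def potential_curvature(phi, mu_squared=1.0, lam=1.0):
    """V''(phi) for V(phi) = -mu^2 phi^2 / 2 + lam phi^4 / 4 (hypothetical parameters)."""
    return -mu_squared + 3.0 * lam * phi * phi


if __name__ == "__main__":
    for p in (0.0, 1.0, 10.0):
        print(f"ordinary, p = {p:6.3f}: v = {group_velocity(p, 1.0):.3f}")
    for p in (1.001, 2.0, 10.0):
        print(f"tachyon,  p = {p:6.3f}: v = {group_velocity(p, -1.0):.3f}")
    true_vacuum = math.sqrt(1.0 / 1.0)  # phi = mu / sqrt(lam) with mu = lam = 1
    print("m^2 about phi = 0:", potential_curvature(0.0))
    print("m^2 about the true vacuum:", potential_curvature(true_vacuum))
```

Expanding about the true vacuum turns the tachyonic mode into an ordinary particle with positive mass squared, which is the sense in which a tachyon only signals that you chose the wrong point to expand around.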