Last Monday was an exciting day!

After following the BICEP2 announcement via Twitter, I had to board a transcontinental flight, so I had 5 uninterrupted hours to think about what it all meant. Without Internet access or references, and having not thought seriously about inflation for decades, I wanted to reconstruct a few scraps of knowledge needed to interpret the implications of r ~ 0.2.

I did what any physicist would have done … I derived the basic equations without worrying about niceties such as factors of 3 or $2\pi$. None of what I derived was at all original (the theory has been known for 30 years), but I’ve decided to turn my in-flight notes into a blog post. Experts may cringe at the crude approximations and overlooked conceptual nuances, not to mention the missing references. But some mathematically literate readers who are curious about the implications of the BICEP2 findings may find these notes helpful. I should emphasize that I am not an expert on this stuff (anymore), and if there are serious errors I hope better informed readers will point them out.

By tradition, careless estimates like these are called “back-of-the-envelope” calculations. There have been times when I have made notes on the back of an envelope, or a napkin or place mat. But in this case I had the presence of mind to bring a notepad with me.

Notes from a plane ride

According to inflation theory, a nearly homogeneous scalar field called the inflaton (denoted by $\phi$) filled the very early universe. The value of $\phi$ varied with time, as determined by a potential function $V(\phi)$. The inflaton rolled slowly for a while, while the dark energy stored in $V(\phi)$ caused the universe to expand exponentially. This rapid cosmic inflation lasted long enough that previously existing inhomogeneities in our currently visible universe were nearly smoothed out. What inhomogeneities remained arose from quantum fluctuations in the inflaton and the spacetime geometry occurring during the inflationary period.

Gradually, the rolling inflaton picked up speed. When its kinetic energy became comparable to its potential energy, inflation ended, and the universe “reheated”: the energy previously stored in the potential $V(\phi)$ was converted to hot radiation, instigating a “hot big bang”. As the universe continued to expand, the radiation cooled. Eventually, the energy density in the universe came to be dominated by cold matter, and the relic fluctuations of the inflaton became perturbations in the matter density. Regions that were more dense than average grew even more dense due to their gravitational pull, eventually collapsing into the galaxies and clusters of galaxies that fill the universe today. Relic fluctuations in the geometry became gravitational waves, which BICEP2 seems to have detected.

Both the density perturbations and the gravitational waves have been detected via their influence on the inhomogeneities in the cosmic microwave background. The 2.726 K photons left over from the big bang have a nearly uniform temperature as we scan across the sky, but there are small deviations from perfect uniformity that have been precisely measured. We won’t worry about the details of how the size of the perturbations is inferred from the data. Our goal is to achieve a crude understanding of how the density perturbations and gravitational waves are related, which is what the BICEP2 results are telling us about. We also won’t worry about the details of the shape of the potential function $V(\phi)$, though it’s very interesting that we might learn a lot about that from the data.

Exponential expansion

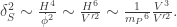

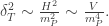

Einstein’s field equations tell us how the rate at which the universe expands during inflation is related to the energy density stored in the scalar field potential. If $a(t)$ is the “scale factor” which describes how lengths grow with time, then roughly

$$\left(\frac{\dot a}{a}\right)^2 \sim \frac{V}{m_P^2}.$$

Here $\dot a$ means the time derivative of the scale factor, and $m_P = 1/\sqrt{8\pi G} \approx 2.4 \times 10^{18}$ GeV is the Planck scale associated with quantum gravity. ($G$ is Newton’s gravitational constant.) I’ve left out a factor of 3 on purpose, and I used the symbol ~ rather than = to emphasize that we are just trying to get a feel for the order of magnitude of things. I’m using units in which Planck’s constant $\hbar$ and the speed of light $c$ are set to one, so mass, energy, and inverse length (or inverse time) all have the same dimensions. 1 GeV means one billion electron volts, about the mass of a proton.

(To persuade yourself that this is at least roughly the right equation, you should note that a similar equation applies to an expanding spherical ball of radius $a(t)$ with uniform mass density $V$. But in the case of the ball, the mass density would decrease as the ball expands. The universe is different: it can expand without diluting its mass density, so the rate of expansion $\dot a/a$ does not slow down as the expansion proceeds.)
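One way to make the ball analogy concrete is a short Newtonian sketch. It assumes a test mass on the surface of a uniform ball with zero total energy; both assumptions are added here for illustration and are not a substitute for Einstein’s equations.

```latex
% Uniform ball of radius a(t) and mass density V, so M = (4\pi/3) V a^3.
% Assumption: kinetic plus potential energy per unit test mass is zero.
\begin{align}
\tfrac{1}{2}\,\dot a^2 - \frac{G M}{a} &= 0, \qquad M = \frac{4\pi}{3}\, V a^3 \\
\left(\frac{\dot a}{a}\right)^2 &= \frac{8\pi G}{3}\, V = \frac{V}{3\, m_P^2},
\qquad m_P = \frac{1}{\sqrt{8\pi G}}
\end{align}
```

Dropping the factor of 3 gives the rough relation $(\dot a/a)^2 \sim V/m_P^2$. For an ordinary ball $M$ is fixed, so $V$ falls like $a^{-3}$ and the expansion rate drops as the ball grows.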

During inflation, the scalar field $\phi$ and therefore the potential energy $V(\phi)$ were changing slowly; it’s a good approximation to assume $V$ is constant. Then the solution is

$$a(t) \sim a(0)\, e^{Ht},$$

where $H$, the Hubble constant during inflation, is

$$H \sim \frac{\sqrt{V}}{m_P}.$$

To explain the smoothness of the observed universe, we require at least 50 “e-foldings” of inflation before the universe reheated; that is, inflation should have lasted for a time at least $50\,H^{-1}$.

Slow rolling

During inflation the inflaton $\phi$ rolls slowly, so slowly that friction dominates inertia; this friction results from the cosmic expansion. The speed of rolling $\dot\phi$ is determined by

$$H \dot\phi \sim -V'(\phi).$$

Here $V'(\phi)$ is the slope of the potential, so the right-hand side is the force exerted by the potential, which matches the frictional force on the left-hand side. The coefficient of $\dot\phi$ has to be $H$ on dimensional grounds. (Here I have blown another factor of 3, but let’s not worry about that.)
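To see friction dominating inertia in practice, here is a minimal numerical sketch. It integrates the standard full equation $\ddot\phi + 3H\dot\phi + V' = 0$ with $3H^2 = (\dot\phi^2/2 + V)/m_P^2$, which restores the factors of 3 and the inertia term dropped above. The quadratic potential, the starting value $\phi_0 = 16\,m_P$, the step size, and all function names are illustrative assumptions.

```python
import math


def simulate_quadratic_inflation(phi0=16.0, m=1.0, dt=1e-3, max_steps=10_000_000):
    """Integrate the inflaton equation for V = m^2 phi^2 / 2 in units m_P = 1.

    Returns (n_efolds, phi_end): the e-foldings accumulated until
    phi_dot^2 = V, the point where accelerated expansion stops, and the
    field value at that point. Time is measured in units of 1/m.
    """
    phi = phi0
    # Start on the slow-roll solution 3 H phi_dot = -V'.
    hubble = math.sqrt(0.5 * m**2 * phi**2 / 3.0)
    phi_dot = -m**2 * phi / (3.0 * hubble)
    n_efolds = 0.0
    for _ in range(max_steps):
        potential = 0.5 * m**2 * phi**2
        if phi_dot**2 >= potential:
            break  # kinetic energy is now comparable to potential energy
        hubble = math.sqrt((0.5 * phi_dot**2 + potential) / 3.0)
        phi_ddot = -3.0 * hubble * phi_dot - m**2 * phi
        # Simple first-order (semi-implicit Euler) update.
        phi_dot += phi_ddot * dt
        phi += phi_dot * dt
        n_efolds += hubble * dt
    return n_efolds, phi


N_EFOLDS, PHI_END = simulate_quadratic_inflation()
# Slow-roll estimate: dN = -(V / V') dphi = -(phi / 2) dphi.
SLOW_ROLL_N = (16.0**2 - PHI_END**2) / 4.0
print(f"numerical e-foldings: {N_EFOLDS:.1f}, slow-roll estimate: {SLOW_ROLL_N:.1f}")
```

The numerical count should land close to the slow-roll estimate, which is the sense in which the friction-dominated approximation is adequate until the very end of inflation.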

Density perturbations

The trickiest thing we need to understand is how inflation produced the density perturbations which later seeded the formation of galaxies. There are several steps to the argument.

Quantum fluctuations of the inflaton

As the universe inflates, the inflaton field is subject to quantum fluctuations, where the size of the fluctuation depends on its wavelength. Due to inflation, the wavelength increases rapidly, like $e^{Ht}$, and once the wavelength gets large compared to $H^{-1}$, there isn’t enough time for the fluctuation to wiggle: it gets “frozen in.” Much later, long after the reheating of the universe, the oscillation period of the wave becomes comparable to the age of the universe, and then it can wiggle again. (We say that the fluctuations “cross the horizon” at that stage.) Observations of the anisotropy of the microwave background have determined how big the fluctuations are at the time of horizon crossing. What does inflation theory say about that?

Well, first of all, how big are the fluctuations when they leave the horizon during inflation? Then the wavelength is $H^{-1}$ and the universe is expanding at the rate $H$, so $H$ is the only thing the magnitude of the fluctuations could depend on. Since the field $\phi$ has the same dimensions as $H$, we conclude that fluctuations have magnitude

$$\delta\phi \sim H.$$

From inflaton fluctuations to density perturbations

Reheating occurs abruptly when the inflaton field reaches a particular value. Because of the quantum fluctuations, some horizon volumes have larger than average values of $\phi$ and some have smaller than average values; hence different regions reheat at slightly different times. The energy density in regions that reheat earlier starts to be reduced by expansion (“red shifted”) earlier, so these regions have a smaller than average energy density. Likewise, regions that reheat later start to red shift later, and wind up having larger than average density.

When we compare different regions of comparable size, we can find the typical (root-mean-square) fluctuations $\delta t$ in the reheating time, knowing the fluctuations in $\phi$ and the rolling speed $\dot\phi$:

$$\delta t \sim \frac{\delta\phi}{\dot\phi} \sim \frac{H}{\dot\phi}.$$

Small fractional fluctuations in the scale factor $a$ right after reheating produce comparable small fractional fluctuations in the energy density $\rho$. The expansion rate right after reheating roughly matches the expansion rate $H$ right before reheating, and so we find that the characteristic size of the density perturbations is

$$\left(\frac{\delta\rho}{\rho}\right)_{\rm hor} \sim \frac{\delta a}{a} \sim H\,\delta t \sim \frac{H^2}{\dot\phi}.$$

The subscript “hor” serves to remind us that this is the size of density perturbations as they cross the horizon, before they get a chance to grow due to gravitational instabilities. We have found our first important conclusion: The density perturbations have a size determined by the Hubble constant $H$ and the rolling speed $\dot\phi$ of the inflaton, up to a factor of order one which we have not tried to keep track of. Insofar as the Hubble constant and rolling speed change slowly during inflation, these density perturbations have a strength which is nearly independent of the length scale of the perturbation. From here on we will denote this dimensionless scale of the fluctuations by $\delta_S$, where the subscript $S$ stands for “scalar”.

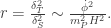

Perturbations in terms of the potential

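Putting together $\dot\phi \sim -V'/H$ and $H \sim \sqrt{V}/m_P$ with our expression for $\delta_S$, we find

$$\delta_S^2 \sim \frac{H^4}{\dot\phi^2} \sim \frac{H^6}{V'^2} \sim \frac{1}{m_P^6}\,\frac{V^3}{V'^2}.$$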

The observed density perturbations are telling us something interesting about the scalar field potential during inflation.
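As a quick check on the algebra, the sketch below computes $\delta_S^2$ two ways: step by step through $H \sim \sqrt{V}/m_P$, $H\dot\phi \sim -V'$ and $\delta_S^2 \sim H^4/\dot\phi^2$, and directly from $V^3/(m_P^6 V'^2)$. The sample values of $V$ and $V'$ (in units with $m_P = 1$) are hypothetical and chosen only for illustration.

```python
import math


def crude_scalar_amplitude(potential, slope, m_p=1.0):
    """Return delta_S^2 computed two ways from the crude relations.

    potential: V, slope: V', both in units where m_P = m_p.
    All order-one factors are dropped, as in the text.
    """
    hubble = math.sqrt(potential) / m_p        # H ~ sqrt(V) / m_P
    phi_dot = -slope / hubble                  # H phi_dot ~ -V'
    via_rolling = hubble**4 / phi_dot**2       # delta_S^2 ~ H^4 / phi_dot^2
    via_potential = potential**3 / (m_p**6 * slope**2)
    return via_rolling, via_potential


# Hypothetical sample point on some potential, in units m_P = 1.
VIA_ROLLING, VIA_POTENTIAL = crude_scalar_amplitude(potential=1e-9, slope=1e-10)
print(VIA_ROLLING, VIA_POTENTIAL)
```

The two numbers agree up to floating-point rounding, which confirms that the final expression is just the earlier relations chained together.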

Gravitational waves and the meaning of r

The gravitational field as well as the inflaton field is subject to quantum fluctuations during inflation. We call these tensor fluctuations to distinguish them from the scalar fluctuations in the energy density. The tensor fluctuations have an effect on the microwave anisotropy which can be distinguished in principle from the scalar fluctuations. We’ll just take that for granted here, without worrying about the details of how it’s done.

While a scalar field fluctuation with wavelength $\lambda$ and strength $\delta\phi$ carries energy density $\sim \delta\phi^2/\lambda^2$, a fluctuation of the dimensionless gravitational field $h$ with wavelength $\lambda$ and strength $\delta h$ carries energy density $\sim m_P^2\,\delta h^2/\lambda^2$. Applying the same dimensional analysis we used to estimate $\delta\phi$ at horizon crossing to the rescaled field $m_P h$, we estimate the strength $\delta_T$ of the tensor fluctuations (the fluctuations of $h$) as

$$\delta_T^2 \sim \frac{H^2}{m_P^2} \sim \frac{V}{m_P^4}.$$

From observations of the CMB anisotropy we know that $\delta_S^2 \sim 10^{-9}$, and now BICEP2 claims that the ratio

$$r = \frac{\delta_T^2}{\delta_S^2}$$

is about $0.2$ at an angular scale on the sky of about one degree. The conclusion (being a little more careful about the O(1) factors this time) is

$$V^{1/4} \sim 2 \times 10^{16}\ \mathrm{GeV} \left(\frac{r}{0.2}\right)^{1/4}.$$

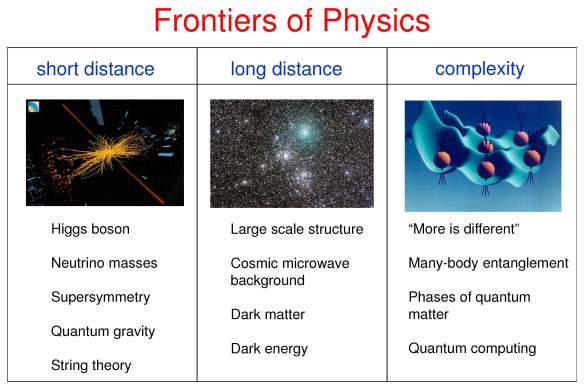

This is our second important conclusion: The energy density during inflation defines a mass scale, which turns out to be $2 \times 10^{16}$ GeV for the observed value of $r$. This is a very interesting finding because this mass scale is not so far below the Planck scale, where quantum gravity kicks in, and is in fact pretty close to theoretical estimates of the unification scale in supersymmetric grand unified theories. If this mass scale were a factor of 2 smaller, then $r$ would be smaller by a factor of 16, and hence much harder to detect.
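The numbers in this section are easy to play with. The sketch below assumes the scaling $V^{1/4} \propto r^{1/4}$ normalized to $2 \times 10^{16}$ GeV at $r = 0.2$, compares it with the cruder estimate $\delta_T^2 \sim V/m_P^4$ using $\delta_S^2 \sim 10^{-9}$, and converts the crude Hubble rate $H \sim \sqrt{V}/m_P$ into seconds. The function names are hypothetical, and the value of $\hbar$ in GeV·s is a standard constant brought in only for the unit conversion.

```python
M_P = 2.4e18      # reduced Planck mass in GeV
HBAR = 6.582e-25  # Planck's constant in GeV * s, converts 1/GeV to seconds


def quoted_scale(r):
    """V^(1/4) in GeV, assuming 2e16 GeV at r = 0.2 and V proportional to r."""
    return 2e16 * (r / 0.2) ** 0.25


def r_from_scale(v_quarter):
    """Invert quoted_scale: the r implied by a given V^(1/4) in GeV."""
    return 0.2 * (v_quarter / 2e16) ** 4


def crude_scale(r, delta_s2=1e-9):
    """V^(1/4) in GeV from delta_T^2 ~ V / m_P^4 with delta_T^2 = r * delta_S^2."""
    return M_P * (r * delta_s2) ** 0.25


def crude_hubble(v_quarter):
    """H ~ sqrt(V) / m_P in GeV, with the factor of 3 dropped."""
    return v_quarter**2 / M_P


def seconds_for_efolds(n_efolds, hubble_gev):
    """Time in seconds for n_efolds of expansion at a constant Hubble rate."""
    return n_efolds * HBAR / hubble_gev


HUBBLE = crude_hubble(quoted_scale(0.2))
print(f"crude V^(1/4): {crude_scale(0.2):.2e} GeV")
print(f"r if the scale is halved: {r_from_scale(1e16):.4f}")
print(f"H ~ {HUBBLE:.2e} GeV, 50 e-foldings take ~ {seconds_for_efolds(50, HUBBLE):.1e} s")
```

The crude estimate comes out within a factor of a few of the more careful value, which is about what one should expect after discarding the O(1) factors.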

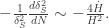

Rolling, rolling, rolling, …

Using $\delta_S^2 \sim H^4/\dot\phi^2$ and $\delta_T^2 \sim H^2/m_P^2$, we can express $\dot\phi^2$ as

$$\dot\phi^2 \sim \frac{H^4}{\delta_S^2} \sim \frac{\delta_T^2}{\delta_S^2}\, m_P^2 H^2 \sim r\, m_P^2 H^2.$$

It is convenient to measure time in units of the number $N = Ht$ of e-foldings of inflation, in terms of which we find

$$\frac{1}{m_P^2}\left(\frac{d\phi}{dN}\right)^2 \sim r.$$

Now, we know that for inflation to explain the smoothness of the universe we need $N$ larger than 50, and if we assume that the inflaton rolls at a roughly constant rate during $N$ e-foldings, we conclude that, while rolling, the change in the inflaton field is

$$\frac{\Delta\phi}{m_P} \sim N\sqrt{r}.$$

This is our third important conclusion: the inflaton field had to roll a long, long way during inflation; it changed by much more than the Planck scale! Putting in the O(1) factors we have left out reduces the required amount of rolling by about a factor of 3, but we still conclude that the rolling was super-Planckian if $r \sim 0.2$. That’s curious, because when the scalar field strength is super-Planckian, we expect the kind of effective field theory we have been implicitly using to be a poor approximation because quantum gravity corrections are large. One possible way out is that the inflaton might have rolled round and round in a circle instead of in a straight line, so the field strength stayed sub-Planckian even though the distance traveled was super-Planckian.
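To put numbers on the amount of rolling, the sketch below compares the crude estimate $\Delta\phi/m_P \sim N\sqrt{r}$ with a version that keeps the O(1) factors. The more careful formula assumes the standard slow-roll relations $3H\dot\phi = -V'$, $3H^2 = V/m_P^2$ and $r = 16\epsilon$ with $\epsilon = (m_P^2/2)(V'/V)^2$, which together give $(d\phi/dN)^2/m_P^2 = 2\epsilon = r/8$; it also assumes $r$ stays constant over the $N$ e-foldings. Function names are hypothetical.

```python
import math


def crude_field_excursion(n_efolds, r):
    """Delta phi / m_P ~ N sqrt(r), with order-one factors dropped."""
    return n_efolds * math.sqrt(r)


def careful_field_excursion(n_efolds, r):
    """Delta phi / m_P = N sqrt(r / 8), assuming r = 16 epsilon is constant."""
    return n_efolds * math.sqrt(r / 8.0)


CRUDE = crude_field_excursion(50, 0.2)
CAREFUL = careful_field_excursion(50, 0.2)
print(f"crude: {CRUDE:.1f} m_P, careful: {CAREFUL:.1f} m_P, ratio: {CRUDE / CAREFUL:.2f}")
```

The ratio is $\sqrt{8} \approx 2.8$, in line with the reduction by about a factor of 3, and the careful excursion is still several times the Planck scale for $r \sim 0.2$.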

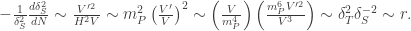

Spectral tilt

As the inflaton rolls, the potential energy, and hence also the Hubble constant $H$, change during inflation. That means that both the scalar and tensor fluctuations have a strength which is not quite independent of length scale. We can parametrize the scale dependence in terms of how the fluctuations change per e-folding of inflation, which is equivalent to the change per logarithmic length scale and is called the “spectral tilt.”

To keep things simple, let’s suppose that the rate of rolling is constant during inflation, at least over the length scales for which we have data. Using $\delta_S^2 \sim H^4/\dot\phi^2$, and assuming $\dot\phi$ is constant, we estimate the scalar spectral tilt as

$$-\frac{d}{dN}\ln\delta_S^2 \sim -\frac{4\dot H}{H^2}.$$

Using $\delta_T^2 \sim H^2/m_P^2$, we conclude that the tensor spectral tilt is half as big.

From $H^2 \sim V/m_P^2$, we find

$$\dot H \sim \frac{1}{2}\,\dot\phi\,\frac{V'}{V}\,H,$$

and using $\dot\phi \sim -V'/H$ we find

$$-\frac{d}{dN}\ln\delta_S^2 \sim \frac{V'^2}{H^2 V} \sim m_P^2\left(\frac{V'}{V}\right)^2 \sim \left(\frac{V}{m_P^4}\right)\left(\frac{m_P^6 V'^2}{V^3}\right) \sim \delta_T^2\,\delta_S^{-2} \sim r.$$

Putting in the numbers more carefully we find a scalar spectral tilt of $r/4$ and a tensor spectral tilt of $r/8$.

This is our last important conclusion: A relatively large value of $r$ means a significant spectral tilt. In fact, even before the BICEP2 results, the CMB anisotropy data already supported a scalar spectral tilt of about 0.04, which suggested something like $r \sim 0.16$. The BICEP2 detection of the tensor fluctuations (if correct) has confirmed that suspicion.

Summing up

If you have stuck with me this far, and you haven’t seen this stuff before, I hope you’re impressed. Of course, everything I’ve described can be done much more carefully. I’ve tried to convey, though, that the emerging story seems to hold together pretty well. Compared to last week, we have stronger evidence now that inflation occurred, that the mass scale of inflation is high, and that the scalar and tensor fluctuations produced during inflation have been detected. One prediction is that the tensor fluctuations, like the scalar ones, should have a notable spectral tilt, though a lot more data will be needed to pin that down.

I apologize to the experts again, for the sloppiness of these arguments. I hope that I have at least faithfully conveyed some of the spirit of inflation theory in a way that seems somewhat accessible to the uninitiated. And I’m sorry there are no references, but I wasn’t sure which ones to include (and I was too lazy to track them down).

It should also be clear that much can be done to sharpen the confrontation between theory and experiment. A whole lot of fun lies ahead.

Added notes (3/25/2014):

Okay, here’s a good reference, a useful review article by Baumann. (I found out about it on Twitter!)

From Baumann’s lectures I learned a convenient notation. The rolling of the inflaton can be characterized by two “potential slow-roll parameters” defined by

$$\epsilon = \frac{m_P^2}{2}\left(\frac{V'}{V}\right)^2, \qquad \eta = m_P^2\,\frac{V''}{V}.$$

Both parameters are small during slow rolling, but the relationship between them depends on the shape of the potential. My crude approximation ($\epsilon = \eta$) would hold for a quadratic potential.

We can express the spectral tilt (as I defined it) in terms of these parameters, finding $2\epsilon$ for the tensor tilt, and $6\epsilon - 2\eta$ for the scalar tilt. To derive these formulas it suffices to know that $\delta_S^2$ is proportional to $V^3/V'^2$, and that $\delta_T^2$ is proportional to $H^2$; we also use

$$3H\dot\phi = -V', \qquad 3H^2 = \frac{V}{m_P^2},$$

keeping factors of 3 that I left out before. (As a homework exercise, check these formulas for the tensor and scalar tilt.)
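For readers who want to compare against their own attempt at the exercise, one possible route is sketched below. It assumes the tilt is defined as $-d\ln\delta^2/dN$ with $N = Ht$, that $\delta_T^2 \propto H^2 \propto V$ and $\delta_S^2 \propto V^3/V'^2$, and that $\epsilon = (m_P^2/2)(V'/V)^2$ and $\eta = m_P^2 V''/V$.

```latex
% Slow-roll relations with the factors of 3 kept:
% 3 H \dot\phi = -V', \quad 3 H^2 = V / m_P^2.
\begin{align}
\frac{d\phi}{dN} &= \frac{\dot\phi}{H} = -\frac{V'}{3H^2} = -m_P^2\,\frac{V'}{V} \\
-\frac{d}{dN}\ln\delta_T^2 &= -\frac{V'}{V}\,\frac{d\phi}{dN}
  = m_P^2\left(\frac{V'}{V}\right)^2 = 2\epsilon \\
-\frac{d}{dN}\ln\delta_S^2 &= -\left(3\,\frac{V'}{V} - 2\,\frac{V''}{V'}\right)\frac{d\phi}{dN}
  = 3\,m_P^2\left(\frac{V'}{V}\right)^2 - 2\,m_P^2\,\frac{V''}{V}
  = 6\epsilon - 2\eta
\end{align}
```

The only inputs are the chain rule and the two slow-roll relations, so the result does not depend on the normalization of either fluctuation spectrum.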

It is also easy to see that $r$ is proportional to $\epsilon$; it turns out that $r = 16\epsilon$. To get that factor of 16 we need more detailed information about the relative size of the tensor and scalar fluctuations than I explained in the post; I can’t think of a handwaving way to derive it.

We see, though, that the conclusion that the tensor tilt is $r/8$ does not depend on the details of the potential, while the relation between the scalar tilt and $r$ does depend on the details. Nevertheless, it seems fair to claim (as I did) that, already before we knew the BICEP2 results, the measured nonzero scalar spectral tilt indicated a reasonably large value of $r$.
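The sketch below illustrates this model dependence for a hypothetical family of power-law potentials $V \propto \phi^p$, assuming $\epsilon = (m_P^2/2)(V'/V)^2$, $\eta = m_P^2 V''/V$, $r = 16\epsilon$, tensor tilt $2\epsilon$ and scalar tilt $6\epsilon - 2\eta$. The field value $\phi = 15\,m_P$ and the function name are arbitrary choices for illustration.

```python
def slow_roll_power_law(p, phi, m_p=1.0):
    """Slow-roll quantities for V proportional to phi**p.

    Uses V'/V = p / phi and V''/V = p (p - 1) / phi**2.
    """
    epsilon = 0.5 * m_p**2 * (p / phi) ** 2
    eta = m_p**2 * p * (p - 1) / phi**2
    return {
        "epsilon": epsilon,
        "eta": eta,
        "r": 16.0 * epsilon,
        "tensor_tilt": 2.0 * epsilon,
        "scalar_tilt": 6.0 * epsilon - 2.0 * eta,
    }


for power in (1, 2, 4):
    q = slow_roll_power_law(power, phi=15.0)
    print(
        f"p={power}: tensor tilt / r = {q['tensor_tilt'] / q['r']:.4f}, "
        f"scalar tilt / r = {q['scalar_tilt'] / q['r']:.4f}"
    )
```

The tensor ratio is 1/8 for every power, while the scalar ratio changes with the shape of the potential and equals 1/4 only in the quadratic case.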

Once again, we’re lucky. On the one hand, it’s good to have a robust prediction (for the tensor tilt). On the other hand, it’s good to have a handle (the scalar tilt) for distinguishing among different inflationary models.

One last point is worth mentioning. We have set Planck’s constant $\hbar$ equal to one so far, but it is easy to put the powers of $\hbar$ back in using dimensional analysis (we’ll continue to assume the speed of light $c$ is one). Since Newton’s constant $G$ has the dimensions of length/energy, and the potential $V$ has the dimensions of energy/volume, while $\hbar$ has the dimensions of energy times length, we see that

$$\delta_T^2 \sim \hbar\, G^2 V.$$

Thus the production of gravitational waves during inflation is a quantum effect, which would disappear in the limit $\hbar \to 0$. Likewise, the scalar fluctuation strength $\delta_S^2$ is also $O(\hbar)$, and hence also a quantum effect.
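The dimensional bookkeeping can be checked mechanically. The sketch below tracks each quantity as a pair of exponents (energy, length) with $c = 1$, and solves for the power of $\hbar$ needed to make $G^2 V$ dimensionless, as it must be if $\delta_T^2 \sim V/m_P^4 \propto G^2 V$. The representation and function names are hypothetical, chosen for illustration.

```python
# Dimensions as exponents of (energy, length), with c = 1.
HBAR = (1, 1)        # energy * length
NEWTON_G = (-1, 1)   # length / energy
POTENTIAL = (1, -3)  # energy / volume


def combine(*factors):
    """Dimensions of a product, given (dimension, power) pairs."""
    energy = sum(dim[0] * power for dim, power in factors)
    length = sum(dim[1] * power for dim, power in factors)
    return (energy, length)


def hbar_power_for_dimensionless(g_power, v_power):
    """Power a such that hbar^a G^g V^v is dimensionless, or None if impossible."""
    partial = combine((NEWTON_G, g_power), (POTENTIAL, v_power))
    # hbar^a contributes (a, a), so both leftover exponents must equal -a.
    if partial[0] != partial[1]:
        return None
    return -partial[0]


print(hbar_power_for_dimensionless(2, 1))
print(combine((HBAR, 1), (NEWTON_G, 2), (POTENTIAL, 1)))
```

A single power of $\hbar$ is what makes the combination dimensionless, which is the statement that the tensor fluctuation strength vanishes in the classical limit.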

Therefore the detection of primordial gravitational waves by BICEP2, if correct, confirms that gravity is quantized just like the other fundamental forces. That shouldn’t be a surprise, but it’s nice to know.